As this journey continues, I figured it was time to address the scary ghost in the room: multitenancy. If I am being honest, this is where I spend the vast majority of my design hours. These design decisions have a massive impact on component design, for example, how many edges, how many T0/T1 gateways, how many L3 GWs, how many vNETs, etc. In this article, I will compare and contrast the multitenant design options, and their impacts, for VMware NSX and Azure Stack SDN.

Apologies in advance for the length of this one but it is a heavy topic 🙂

What is Multitenancy in a Software Defined Networking world?

Prior to Software Defined Networking solutions, physical datacenters were limited to 4096 VLANs (broadcast domains) on each device, because the 802.1Q VLAN ID is only 12 bits wide.

The emergence of VXLAN (Virtual Extensible LAN) as a popular overlay protocol has allowed you to overcome this limitation: its 24-bit network identifier (VNI) allows for roughly 16 million isolated segments.
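To put the two limits side by side, here is a quick back-of-the-envelope calculation. It assumes the standard header field widths (a 12-bit 802.1Q VLAN ID and a 24-bit VXLAN Network Identifier); the variable names are only for illustration.

```python
# Field widths from the 802.1Q and VXLAN (RFC 7348) headers.
VLAN_ID_BITS = 12
VXLAN_VNI_BITS = 24

vlan_ids = 2 ** VLAN_ID_BITS          # every possible VLAN ID
usable_vlans = vlan_ids - 2           # IDs 0 and 4095 are reserved
vxlan_segments = 2 ** VXLAN_VNI_BITS  # every possible VNI

print(f"VLAN IDs:       {vlan_ids} ({usable_vlans} usable)")
print(f"VXLAN segments: {vxlan_segments:,}")
print(f"Scale factor:   {vxlan_segments // vlan_ids}x")
```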

Multi-Tenancy infrastructure is a game-changer in the world of cloud computing and onprem Software Defined Networking. It allows multiple tenants to share the same computing and networking resources within a data center, which greatly reduces the physical footprint of these architectures. This, in turn, lowers the total cost of ownership as dedicated hardware is unnecessary. With Multi-Tenancy infrastructure, businesses can save money without sacrificing performance.

Below, I will cover the different multitenant options you have with NSX and Azure Stack SDN.

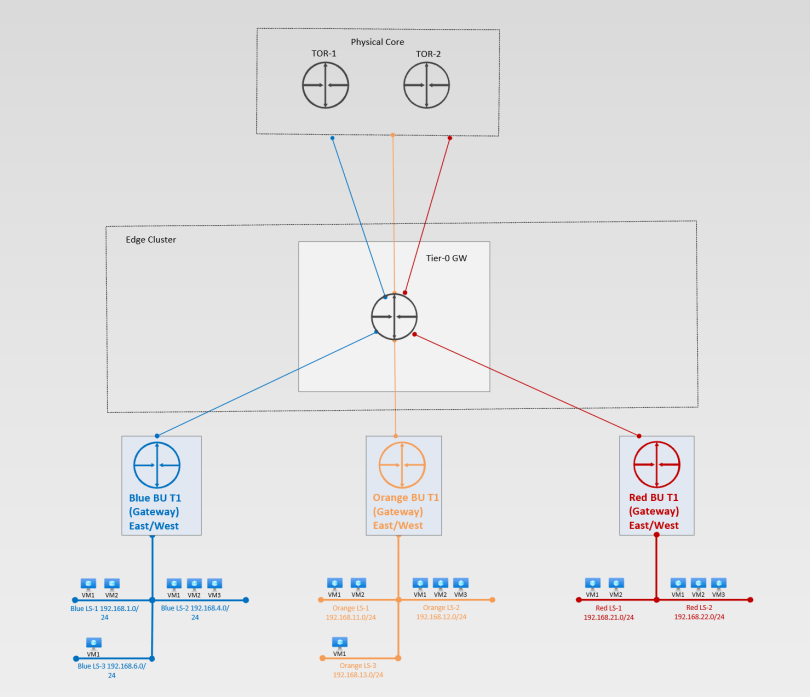

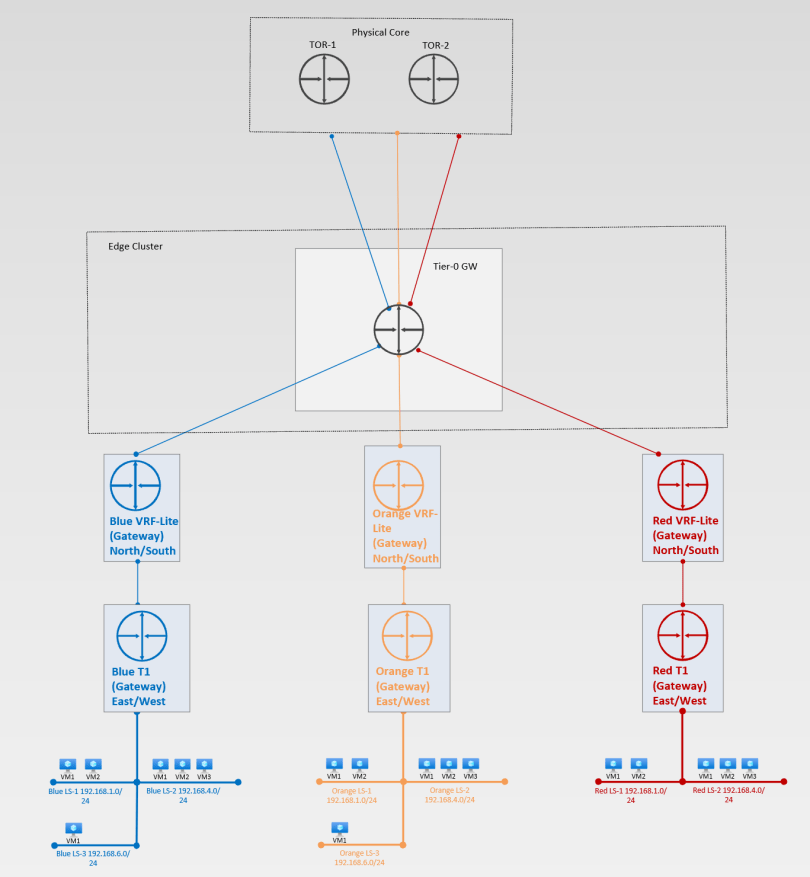

NSX Multiple Tier-1 Gateways per Tenant

The Tier-1 gateway becomes the first-hop router, and each tenant is separated by its own dedicated Tier-1 GW. This is the preferred architecture when you want ease of management, low resource overhead, and the least complex design.

Benefits:

- Tenant isolation at T1 GW level

- Can have up to 1k T1 GWs per T0 GW

- Eliminates the dependency on the physical network when a new tenant is provisioned in NSX

- Does not require additional compute resources

Downsides:

- Cannot have overlapping IP space; overlapping ranges require a dedicated T0 or VRF Lite

- Management overhead to monitor existing IP space for new workload creation
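Because all tenants share one routing domain in this design, the IP monitoring overhead usually comes down to an overlap check before each new segment is created. Here is a minimal sketch of such a check using Python's standard `ipaddress` module; the tenant names and prefixes are made up for illustration, and this is not tied to any NSX API.

```python
import ipaddress
from itertools import combinations

# Hypothetical tenant-to-prefix allocations behind one shared T0 GW.
tenant_prefixes = {
    "red": ["10.10.0.0/24", "10.10.1.0/24"],
    "blue": ["10.20.0.0/24"],
    "green": ["10.10.1.128/25"],  # collides with red's second segment
}

def find_overlaps(allocations):
    """Return (tenant_a, prefix_a, tenant_b, prefix_b) for every overlapping pair."""
    flat = [
        (tenant, ipaddress.ip_network(prefix))
        for tenant, prefixes in allocations.items()
        for prefix in prefixes
    ]
    return [
        (ta, str(na), tb, str(nb))
        for (ta, na), (tb, nb) in combinations(flat, 2)
        if na.overlaps(nb)
    ]

for ta, na, tb, nb in find_overlaps(tenant_prefixes):
    print(f"{ta} {na} overlaps {tb} {nb}")
```

Running a check like this against your IPAM export before provisioning a new tenant segment catches the conflict before it reaches the routing table.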

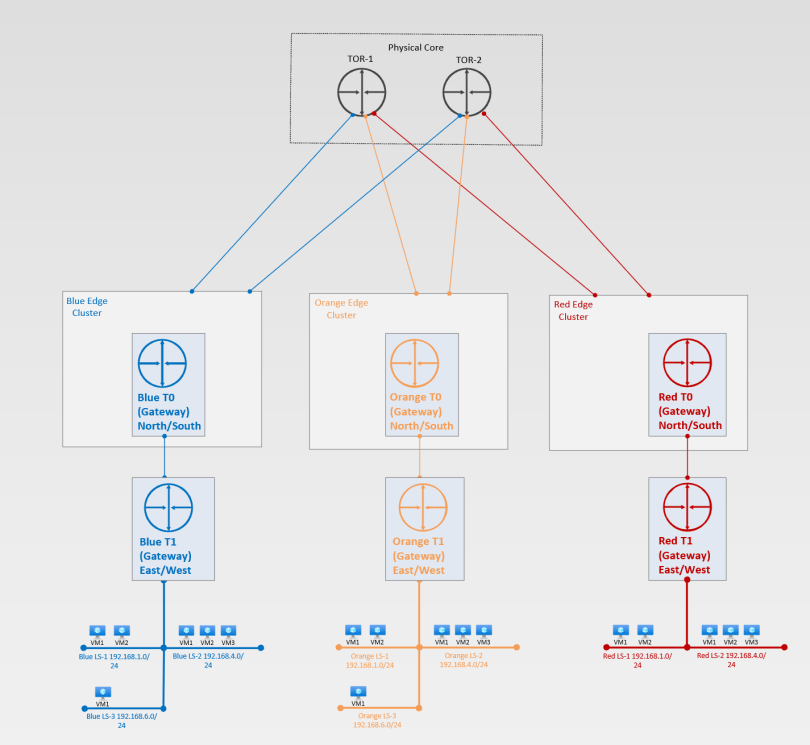

Dedicated Tier-0 Gateways per Tenant

Each tenant is given their own dedicated Tier-0 GW allowing for full isolation from other overlay networks in the environment. This is how one would build out a Cloud Service Provider (CSP) architecture onprem leveraging NSX. This would effectively allow you to run the same CIDR ranges in each environment on top of the same shared hardware.

Benefits:

- Allows for isolated logical networks at T0 GW level

- Allows for overlapping IP to be used in each dedicated T0 level without conflicts

- Enables CSP architecture

- Great design for mergers and acquisitions

Downsides:

- Only a single T0 GW can be configured per edge cluster.

- This requires an edge cluster per isolated T0 GW deployment.

- For example, if you require three (3) dedicated edge clusters (2 x Edge VMs per cluster) with the recommended large edge VMs (32 GB memory, 8 x vCPU, 200 GB storage per VM), that is six edge VMs in total.

- The total compute resources for the above would be 192 GB memory, 48 x vCPU, and 1.2 TB of storage (no, I am not a fan of thin provisioning storage for edge VMs).
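The sizing math above is easy to rerun for a different tenant count. This is a small sketch that assumes the same figures quoted in this section (two large edge VMs per cluster at 32 GB memory, 8 vCPU, and 200 GB of storage each); the function name is mine, so adjust the constants to your own form factor.

```python
# Per-VM figures for the large edge form factor, as quoted in this section.
EDGE_VMS_PER_CLUSTER = 2
MEMORY_GB_PER_VM = 32
VCPU_PER_VM = 8
STORAGE_GB_PER_VM = 200

def edge_footprint(dedicated_t0_gateways):
    """Total resources when every dedicated T0 GW gets its own edge cluster."""
    vms = dedicated_t0_gateways * EDGE_VMS_PER_CLUSTER
    return {
        "edge_vms": vms,
        "memory_gb": vms * MEMORY_GB_PER_VM,
        "vcpu": vms * VCPU_PER_VM,
        "storage_gb": vms * STORAGE_GB_PER_VM,
    }

totals = edge_footprint(3)
print(totals)
print(f"Storage in TB: {totals['storage_gb'] / 1000:.1f}")
```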

Dedicated VRF Lite Gateway per Tenant

VRF Lite allows you to instantiate isolated routing and forwarding tables within your T0 GW. Each VRF is deployed as a virtual Tier-0 gateway associated with a parent Tier-0 gateway.

All VRFs associated with the T0 GW inherit the following configuration from the parent T0 GW:

- Edge cluster

- HA mode (Active/Active or Active/Standby)

- BGP local AS number

- BGP graceful restart settings

VRF Lite configuration that is independent of the parent T0 GW:

- External interface

- BGP state and neighbor

- Routes, route filter, redistribution

- Firewall, NAT
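One way to picture the split between inherited and independent settings is as a small data model. The sketch below is purely conceptual and is not the NSX API or its object schema; every class, field, and value is a hypothetical name chosen to mirror the two lists above.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class ParentT0:
    """Settings that live on the parent T0 GW and are inherited by every VRF."""
    name: str
    edge_cluster: str
    ha_mode: str               # "active-active" or "active-standby"
    bgp_local_as: int
    bgp_graceful_restart: str  # illustrative value only

@dataclass
class VrfGateway:
    """Settings each VRF owns, plus read-only views of the inherited ones."""
    name: str
    parent: ParentT0
    external_interfaces: list = field(default_factory=list)
    bgp_neighbors: list = field(default_factory=list)
    routes: list = field(default_factory=list)
    firewall_nat_rules: list = field(default_factory=list)

    @property
    def edge_cluster(self):
        return self.parent.edge_cluster

    @property
    def ha_mode(self):
        return self.parent.ha_mode

    @property
    def bgp_local_as(self):
        return self.parent.bgp_local_as

    @property
    def bgp_graceful_restart(self):
        return self.parent.bgp_graceful_restart

parent = ParentT0("t0-shared", "edge-cluster-01", "active-active", 65001, "helper-only")
red = VrfGateway("vrf-red", parent, bgp_neighbors=["192.0.2.1"])
blue = VrfGateway("vrf-blue", parent, bgp_neighbors=["198.51.100.1"])

for vrf in (red, blue):
    print(vrf.name, vrf.edge_cluster, vrf.ha_mode, vrf.bgp_local_as, vrf.bgp_neighbors)
```

The point of the model is that changing `ha_mode` or the local AS on the parent changes it for every tenant VRF at once, while interfaces, neighbors, routes, and firewall/NAT rules stay per tenant.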

Benefits:

- Provides Dedicated T0 benefits as stated above on a shared parent T0 GW

- A single Tier-0 logical router can scale up to 100 VRFs.

Downsides:

- Edge uplink bandwidth is shared across all of the T0 GWs and child VRF Lite GWs

- The T0 can be A/A or A/S, but if even a single VRF Lite GW needs a stateful service, the entire parent T0 GW must be in an A/S configuration

- Networking objects such as IP addresses, VLANs, BGP configuration, and so on, must be configured for each VRF, which can cause scalability challenges when the number of tenants is significant.

  - Requires EVPN to get around this, which adds its own complexity and overhead

- Maintaining many BGP peering adjacencies between the NSX-T Tier-0 and the Top of Rack (ToR) switches / Data Center Gateways will increase the infrastructure operational load.

  - Requires EVPN to get around this, which adds its own complexity and overhead

- No inter-SR routing for VRF routing instances

- NAT doesn’t work for inter-VRF traffic (with inter-VRF static route)
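To get a feel for the BGP peering load mentioned in the list above, here is a rough count. It assumes every VRF peers from each edge node to each ToR switch, which is a simplification I am making for illustration; your topology may peer differently, and the function name is hypothetical.

```python
def bgp_sessions(vrfs, edge_nodes=2, tor_switches=2):
    """BGP sessions needed if every VRF peers from each edge node to each ToR."""
    return vrfs * edge_nodes * tor_switches

for vrfs in (1, 10, 50, 100):
    print(f"{vrfs:>3} VRFs -> {bgp_sessions(vrfs):>3} BGP sessions")
```

Under these assumptions the session count grows linearly with the tenant count, and each of those sessions also needs its own uplink interface, VLAN, and neighbor configuration.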

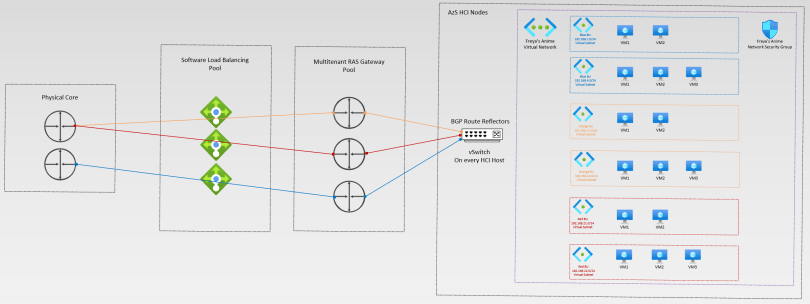

Azure Stack SDN Virtual Subnet per Tenant

Inside Azure Stack SDN you have the concept of a Virtual Network leveraging VXLAN for your encapsulation layer to isolate your networks. Inside the vNET is the concept of a Virtual Subnet, which enables you to segment the virtual network into one or more subnetworks and allocate a portion of the virtual network’s address space to each subnet.

In the above design, we would have a single vNET with a dedicated subnet per tenant. This would closely model the NSX Multiple Tier-1 Gateways per Tenant mentioned above.

Benefits:

- Tenant isolation at subnet level

- Multiple scaling options depending on how you carve up the Virtual Network CIDR

- Eliminates the dependency on the physical network when a new tenant subnet is provisioned in SDN

- Does not require additional compute resources

Downsides:

- Cannot have overlapping IP space; overlapping ranges require a dedicated Virtual Network

- Management overhead to monitor existing IP space for new workload creation
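How far a single vNET scales depends on the prefix length you pick for each tenant subnet. The sketch below uses Python's standard `ipaddress` module against a hypothetical 10.0.0.0/16 address space to show the trade-off between the number of tenant subnets and the addresses in each one. It counts raw addresses only and does not account for any addresses the platform reserves per subnet.

```python
import ipaddress

def carve_options(vnet_cidr, prefix_lengths):
    """Return (prefix_length, subnet_count, addresses_per_subnet) for each option."""
    vnet = ipaddress.ip_network(vnet_cidr)
    options = []
    for new_prefix in prefix_lengths:
        subnets = list(vnet.subnets(new_prefix=new_prefix))
        options.append((new_prefix, len(subnets), subnets[0].num_addresses))
    return options

# Hypothetical vNET address space shared by all tenants.
for prefix, count, size in carve_options("10.0.0.0/16", [20, 24, 26]):
    print(f"/{prefix}: {count} tenant subnets, {size} addresses each")
```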

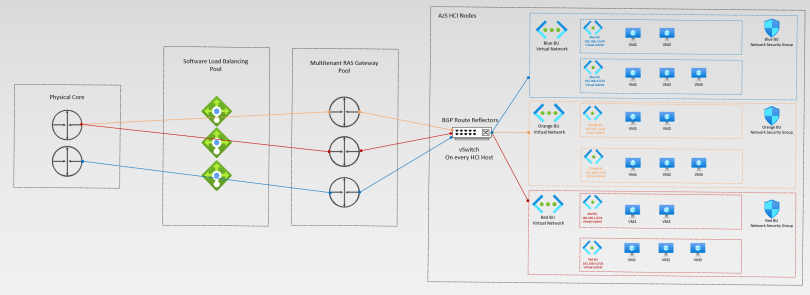

Azure Stack SDN Virtual Network per Tenant

Inside Azure Stack SDN you have the concept of a Virtual Network leveraging VXLAN for your encapsulation layer to isolate your networks. Inside the vNET is the concept of a Virtual Subnet, which enables you to segment the virtual network into one or more subnetworks and allocate a portion of the virtual network’s address space to each subnet.

The major benefit is that you could have the Red tenant and the Blue tenant living on the same shared hardware even if they are different departments within the organization. Neither the Red nor the Blue tenant would have access to the other's networks or resources. This allows Azure Stack HCI to deliver a public cloud experience for a single organization onprem.

In the above design, we would have a single vNET dedicated to each tenant. With this option, we would carve off as many Virtual Subnets as the tenant workloads require. This would closely model both the NSX Dedicated T0 Architecture and the VRF Lite Architecture.

Benefits:

- Allows for isolated logical networks at Virtual Network level

- Allows for overlapping IP to be used in each dedicated Virtual Network level without conflicts

- Enables CSP architecture

- Great design for mergers and acquisitions

- Does not require additional compute resources to create a new Virtual Network
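The overlapping IP benefit comes from each Virtual Network being its own isolated address space. The toy model below illustrates the idea: overlap is only checked inside a vNET, never across vNETs. The class and vNET names are hypothetical, and this is not how the Network Controller is implemented; it only demonstrates the scoping rule.

```python
import ipaddress

class VirtualNetworkRegistry:
    """Toy model: each vNET is isolated, so overlap only matters inside one vNET."""

    def __init__(self):
        self._subnets = {}  # vNET name -> list of ip_network objects

    def add_subnet(self, vnet, cidr):
        new = ipaddress.ip_network(cidr)
        existing = self._subnets.setdefault(vnet, [])
        for net in existing:
            if net.overlaps(new):
                raise ValueError(f"{cidr} overlaps {net} inside vNET {vnet!r}")
        existing.append(new)
        return new

registry = VirtualNetworkRegistry()
registry.add_subnet("red-vnet", "10.0.0.0/24")
registry.add_subnet("blue-vnet", "10.0.0.0/24")  # same CIDR, different vNET: accepted

try:
    registry.add_subnet("red-vnet", "10.0.0.128/25")  # overlaps inside red-vnet
except ValueError as err:
    print(f"Rejected: {err}")
```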

Downsides:

At this point in time I have not discovered a downside to vNETs. They do not require additional compute resources or threaten bottlenecks on NICs no matter how many you generate. They offer full Cloud Service Provider (CSP) capabilities onprem. Even though this series is based on Azure Stack HCI underneath, SDN also runs on Windows Server 2025.

Additional design considerations:

Within Azure Stack SDN you can have multiple types of gateways, even though I discuss the L3 GW the most above. You could have multiple Gateway Pools with different types of gateways, for example, one pool dedicated to L3, another to S2S, or a mixed pool dedicated to Customer A if you really need this. All in all, though, they are multi-tenant GW pools with separation.

GW Architecture Deployment types:

RAS Gateway Deployment Architecture | Microsoft Learn [learn.microsoft.com]

Layer 3 Forwarding Gateway:

RAS Gateway for Software Defined Networking – Azure Stack HCI | Microsoft Learn [learn.microsoft.com]

Gateway Route Reflector:

Overview of BGP Route Reflector in Azure Stack HCI and Windows Server – Azure Stack HCI | Microsoft Learn [learn.microsoft.com]

Summary:

One of the most significant benefits of Software Defined Networking is its ability to support multitenancy. With the power to scale beyond previous limits, even on shared hardware, this technology is truly a game changer. It lets customers scale their network environments with more agility and delivers deeper levels of security through microsegmentation. Whether you are using VMware NSX or Azure Stack SDN, your multitenant design will have a ripple effect on your overall architecture. I hope you found this information useful!

*The thoughts and opinions in this article are mine and do not reflect those of my employer*